Privacy and Information Security: Third‑Party Oversight Case Study

Case study showing how a fintech built a Privacy and Information Security third‑party oversight program using a People, Processes, Platform framework to cut launch delays and reach exam readiness.

Introduction — Before & After

Before: launches stalled because Privacy and Information Security responsibilities lived in separate silos. Vendors handled sensitive data with inconsistent controls, and product releases stopped while teams argued about who owned the risk.

After: a repeatable third‑party oversight program tied vendor privacy and security checks to the product lifecycle. Releases moved on schedule. Regulator questions were handled from a single playbook. The company reached exam‑ready evidence coverage for high‑priority vendors.

This case study shows the custom “People, Processes, Platform” approach we used and the measurable results: faster launches, audit readiness, and progress toward multi‑state licensing.

Background and Core Challenge

The company was a middle‑market fintech providing loan servicing and payments to regional lenders. Growth required expansion into new states and product features that touched consumer account data. Exposure included CFPB exam risk for servicing and state privacy obligations for customer data.

Trigger event: a multistate inquiry uncovered inconsistent disclosures and vendor control gaps around hosted data flows, and it paused a national rollout. The impact was measurable: two major product launches delayed by 6–10 weeks, roughly 400 engineering hours lost, and an estimated $75k in unplanned legal work.

Stakeholders: General Counsel, Head of Product, VP Engineering, the sponsor bank's compliance lead, and procurement. Existing tooling (Jira, Confluence, GitHub, AuditBoard) held pieces of evidence, but there was no central vendor registry and no reliable links from vendors to their evidence.

Regulatory context made action urgent. Interagency guidance sets lifecycle expectations for third‑party risk management. CFPB supervisory highlights also show recurring vendor‑related findings that examiners track closely. The program had to close gaps quickly while remaining defensible to examiners.

A quick acronym cheat sheet for the reader: DPIA (Data Protection Impact Assessment), DPA (Data Processing Agreement), SOC 2 (System and Organization Controls 2 report), SIG (Standardized Information Gathering questionnaire). Keep these in mind as you read.

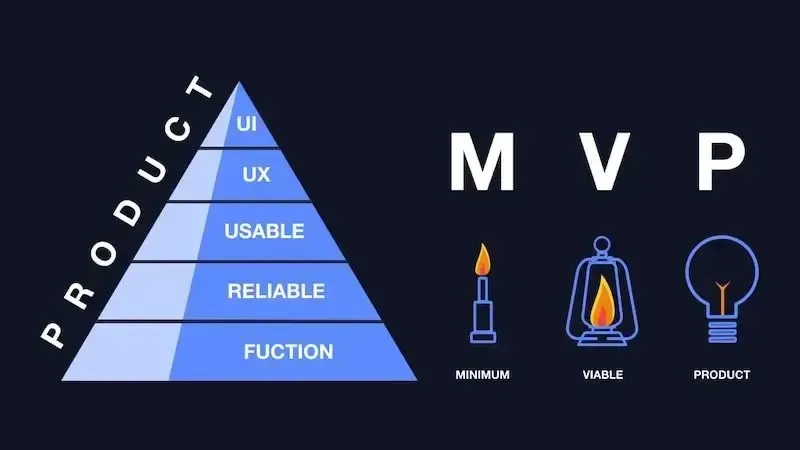

Third‑party oversight: People, Processes, Platform

People, Processes, Platform is the organizing principle.

People: assign clear owners. Product owns intake and business need. Legal owns contracts and DPAs. Compliance owns risk scoring, monitoring cadence, and regulator contact points. Engineering provides technical evidence and access control statements.

Processes: create a lifecycle—intake → assess → contract → monitor → offboard. Tier every vendor (High, Medium, Low) by data sensitivity, business criticality, and access level. Each tier gets a checklist of mandatory evidence and remediation SLAs.
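The tiering step can be sketched in a few lines. This is a minimal illustration, not the scoring model used in the program: the 1-3 scales, the `VendorProfile` type, and the worst-factor rule in `assign_tier` are all assumptions made for the example. Only the three factors and the three tier names come from the text above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VendorProfile:
    """Hypothetical intake record; each factor is scored 1 (low) to 3 (high)."""
    name: str
    data_sensitivity: int      # 3 = sensitive consumer account data
    business_criticality: int  # 3 = core processing, hard to replace
    access_level: int          # 3 = admin or production access


def assign_tier(profile: VendorProfile) -> str:
    """Return "High", "Medium", or "Low" based on the worst-scoring factor.

    Assumption: a vendor is only as low-risk as its riskiest factor.
    """
    scores = (
        profile.data_sensitivity,
        profile.business_criticality,
        profile.access_level,
    )
    if any(score not in (1, 2, 3) for score in scores):
        raise ValueError("each factor must be scored 1, 2, or 3")
    worst = max(scores)
    if worst == 3:
        return "High"
    if worst == 2:
        return "Medium"
    return "Low"


gateway = VendorProfile("payment-gateway-xyz", 3, 3, 2)
print(gateway.name, assign_tier(gateway))
```

A worst-factor rule is deliberately conservative. A weighted sum is a reasonable alternative if the worst-factor rule pushes too many vendors into the High tier.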

Platform: a central vendor registry that links to Jira tickets, Confluence policy pages, GitHub architecture diagrams, and exam folders. Dashboards show control coverage and open remediation items.

We used regulator and industry templates to ground requirements. The FDIC guide provided practical maturity checkpoints to map program scale. For privacy outcomes, we aligned DPIAs and governance to NIST Privacy Framework recommendations.

Vendor card example (real-format, anonymized):

- Vendor: payment-gateway-xyz

- Risk score: High

- Top 3 gaps: missing pen test, no DPA, weak MFA for admin accounts

- Owner: Engineering lead / Product owner

- Last evidence date: 08/15/2024

- Linked artifacts: DPIA, Jira-1234, Confluence‑vendor‑page, exam folder

That single artifact made vendor reviews fast and repeatable.
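For teams that keep the registry in code or export it from a tool, the card above maps naturally onto a small record type. The sketch below is illustrative only: the `VendorCard` class and its field names are assumptions, and the values are copied from the anonymized example.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class VendorCard:
    """Hypothetical in-code representation of one vendor card."""
    vendor: str
    risk_tier: str
    top_gaps: List[str]
    owner: str
    last_evidence_date: date
    linked_artifacts: List[str] = field(default_factory=list)

    def summary(self) -> str:
        """One-line summary suitable for a dashboard row or ticket comment."""
        gaps = "; ".join(self.top_gaps) or "none"
        return f"{self.vendor} [{self.risk_tier}] owner={self.owner} gaps={gaps}"


card = VendorCard(
    vendor="payment-gateway-xyz",
    risk_tier="High",
    top_gaps=["missing pen test", "no DPA", "weak MFA for admin accounts"],
    owner="Engineering lead / Product owner",
    last_evidence_date=date(2024, 8, 15),
    linked_artifacts=["DPIA", "Jira-1234", "Confluence-vendor-page", "exam folder"],
)
print(card.summary())
```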

Privacy Controls and Data Flows

High‑tier vendors require a DPIA, a signed DPA with subprocessor rules, deletion SLAs, encryption commitments, and breach notification timings. Medium‑tier vendors need a streamlined DPIA and standard DPA clauses. Low‑tier vendors get contract language and annual attestation.

Document data flows visually. Attach a vendor‑data‑flow diagram to every vendor card showing where customer data enters, which services transform it, and where it persists. We used OWASP Threat Dragon for lightweight, versioned diagrams.

Mandatory contract clauses to include: processing scope, subprocessor rules, audit rights, breach notification timelines, and deletion/return obligations. The SANS third-party whitepaper provides practical clause examples to adapt.

Cadence and evidence: high‑tier quarterly, medium semi‑annual, low annual. Attach a DPIA template to each high‑risk vendor record to standardize assessments across product teams. For multi‑state deployments, consult the IAPP state privacy tracker to tailor clauses for state requirements.
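The cadence translates directly into a due-date calculation. The following is a minimal sketch that assumes quarterly means 3 calendar months, semi-annual means 6, and annual means 12. The function names and the month-end clamping rule are assumptions for illustration.

```python
import calendar
from datetime import date

# Months between evidence refreshes, per tier (from the cadence above).
REFRESH_MONTHS = {"High": 3, "Medium": 6, "Low": 12}


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping to the last day of shorter months."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def next_refresh_due(tier: str, last_evidence: date) -> date:
    """Date by which the vendor's evidence should be refreshed."""
    return add_months(last_evidence, REFRESH_MONTHS[tier])


def is_overdue(tier: str, last_evidence: date, today: date) -> bool:
    """True when the refresh due date has already passed."""
    return today > next_refresh_due(tier, last_evidence)


print(next_refresh_due("High", date(2024, 8, 15)))
```

A scheduled job could run `is_overdue` across the registry and open a ticket for each overdue vendor.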

Automate discovery where possible. Pull architecture artifacts from GitHub and cloud inventories into the vendor card. When evaluating cloud providers, ask for CSA STAR or Cloud Controls Matrix mappings.

Practical example: one analytics provider processed PII in multiple regions but listed only EU subprocessors. The DPIA flagged storage locations and forced a contract addendum that documented where data lived and how deletion requests would be handled. The addendum cleared a blocker for the product release.

InfoSec Controls and Continuous Monitoring

Define baseline security evidence per tier.

High‑tier: SOC 2 Type II (or equivalent), penetration test report, encryption at rest/in transit, MFA for admin accounts, and an incident response playbook.

Medium‑tier: SOC 2 or ISO attestations and vulnerability scan evidence.

Low‑tier: written security policies and breach notification terms.
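These baselines lend themselves to an automated gap check. The sketch below assumes each evidence item is tracked under a short identifier. The identifiers and the `missing_evidence` helper are hypothetical, and the "or equivalent" allowance for SOC 2 Type II is not modeled.

```python
from typing import Dict, FrozenSet, Iterable, List

# Baseline evidence per tier, using hypothetical identifiers for each item.
REQUIRED_EVIDENCE: Dict[str, FrozenSet[str]] = {
    "High": frozenset({
        "soc2_type2",
        "pen_test_report",
        "encryption_at_rest_in_transit",
        "admin_mfa",
        "incident_response_playbook",
    }),
    "Medium": frozenset({"soc2_or_iso_attestation", "vulnerability_scan"}),
    "Low": frozenset({"security_policies", "breach_notification_terms"}),
}


def missing_evidence(tier: str, on_file: Iterable[str]) -> List[str]:
    """Return the required items not yet on file, sorted for stable output."""
    return sorted(REQUIRED_EVIDENCE[tier] - set(on_file))


gaps = missing_evidence("High", ["soc2_type2", "encryption_at_rest_in_transit"])
print(gaps)
```

Each item returned could become one remediation ticket with an owner and a deadline.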

Map vendor requirements to CIS Controls as a baseline so that each requirement corresponds to a concrete, verifiable safeguard. For attestation expectations, use AICPA guidance on SOC 2 to justify what you ask vendors to provide.

Continuous monitoring is critical. Add a security ratings feed to spot sudden posture changes between evidence windows. For vendors that support it, set up API‑based evidence pulls or forward key logs to your SIEM. Track KPIs: MTTR (mean time to remediate) for control gaps, the percentage of vendors with current evidence, and the number of high‑tier vendors with an accepted penetration test.
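Two of these KPIs are simple to compute once gap open and close dates are recorded. The sketch below reads MTTR as mean time to remediate, measured in days, which is an assumption. The function names and input shapes are also hypothetical.

```python
from datetime import date
from statistics import mean
from typing import Sequence, Tuple


def pct_with_current_evidence(is_current: Sequence[bool]) -> float:
    """Percentage of vendors whose evidence is inside its refresh window."""
    if not is_current:
        return 0.0
    return sum(is_current) / len(is_current) * 100


def mttr_days(closed_gaps: Sequence[Tuple[date, date]]) -> float:
    """Mean days from gap opened to gap closed, over closed gaps only."""
    if not closed_gaps:
        raise ValueError("no closed gaps to average")
    return mean((closed - opened).days for opened, closed in closed_gaps)


# Illustrative inputs only, not figures from the case study.
print(pct_with_current_evidence([True, True, False, True]))
print(mttr_days([
    (date(2024, 1, 1), date(2024, 1, 11)),
    (date(2024, 2, 1), date(2024, 2, 21)),
]))
```

Averaging over closed gaps only will understate MTTR while old gaps remain open, so it is worth reporting the count of open gaps alongside it.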

Write test acceptance criteria into SOWs and require periodic red‑team or penetration testing for vendors that handle sensitive flows. Align vendor security evidence with internal audit and exam‑readiness artifacts to reduce friction during exams.

Mini anecdote: a sudden change in a security rating alerted us to an exposed S3 bucket at a medium‑tier vendor. We isolated the connection, required an immediate remediation ticket in Jira, and documented the action in the vendor card. That log proved useful in the subsequent examiner conversation.

Implementation Timeline — Phase by Phase

Phase 1 — Discovery and triage (60–90 days)

We ran a vendor inventory sweep. Procurement and finance exported vendor lists. Engineering delivered cloud and access reports. Product supplied SOWs. We consolidated records into a central registry and ran risk‑scoring sessions.

Deliverables: consolidated vendor registry, risk tiers, and a priority remediation list for the top 20% of vendors, which represented most of the risk.

Immediate actions: issue contractual hold points for unresolved high‑tier vendors, add temporary segmentation, and restrict launches dependent on vendors with unresolved gaps.

Practical anecdote: one payment gateway lacked a current penetration test. Product had already built a release around it. We paused the release, negotiated a fast‑tracked pen test with the vendor, and resumed the rollout four weeks later. That single decision saved several engineering sprints of rework.

Phase 2 — Controls and contracting (90–120 days)

We closed control gaps through contract addenda and evidence requests. Legal pushed standard DPA/SOW addenda. Compliance sent SIG questionnaires for harmonized evidence collection.

Operationally, each remediation became a Jira ticket with an owner and deadline. We used the SANS clause library to standardize language. For vendors without SOC 2, we accepted alternatives: ISO reports, penetration test reports, or documented compensating controls in a control narrative.

Phase 3 — Continuous monitoring and audit readiness (ongoing)

We established evidence refresh cadences and created exam‑ready folders per high‑priority vendor. Quarterly refresh for high‑tier, semi‑annual for medium, annual for low. We ran tabletop exercises simulating vendor breaches and regulator inquiries.

Exam artifacts included policies, vendor registry snapshots, DPAs, SOC reports, DPIAs, penetration test reports, remediation logs, and a steering deck for executives. CFPB exam guidance helped shape the folder contents and narrative.

Onboarding and Governance: How We Embed Compliance

Repeatable onboarding flow: intake form → auto risk score → vendor card → contract checklist → Jira evidence tickets → monitoring setup. That flow prevented ad‑hoc exceptions.

RACI and sprint integration: publish a RACI in Confluence mapping Product, Legal, Engineering, and Compliance owners. Embed compliance checkpoints into sprint planning and pull requests so that any code introducing a new vendor needs a compliance green light before merge.

Compliance plug‑in: the compliance team is embedded in day‑to‑day workflows, attending sprint planning, owning vendor escalations, and representing the company on regulator calls. The team also drafts contract addenda, leads risk workshops, and prepares exam artifacts.

Governance artifacts delivered: vendor intake form, vendor card template, RACI, escalation playbook, contract addendum, and quarterly steering deck.

Escalation path: unresolved high‑risk issues move from Product → Compliance → GC → Board sponsor. Define regulator notification triggers (for example, confirmed exfiltration or a material service outage).
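The escalation path and notification triggers can be encoded so that tooling routes issues consistently. This is a sketch under stated assumptions: the role names follow the path above, the two trigger identifiers mirror the examples given, and the helper functions are hypothetical.

```python
from typing import Optional

# Ordered escalation path for unresolved high-risk issues.
ESCALATION_PATH = ["Product", "Compliance", "GC", "Board sponsor"]

# Example regulator notification triggers; real triggers should come from
# counsel and the applicable rules, not from this list.
NOTIFICATION_TRIGGERS = {"confirmed_exfiltration", "material_service_outage"}


def next_escalation(current_owner: str) -> Optional[str]:
    """Return the next role in the path, or None at the top of the path."""
    index = ESCALATION_PATH.index(current_owner)  # ValueError if unknown role
    if index + 1 < len(ESCALATION_PATH):
        return ESCALATION_PATH[index + 1]
    return None


def requires_regulator_notification(event_type: str) -> bool:
    """True when the event matches a defined notification trigger."""
    return event_type in NOTIFICATION_TRIGGERS


print(next_escalation("Compliance"))
print(requires_regulator_notification("confirmed_exfiltration"))
```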

Technical integrations: link vendor cards to GitHub architecture diagrams and OWASP Threat Dragon DFDs, and forward agreed logs to your SIEM where possible.

Small workflow note: we added a single Jira label ("vendor‑evidence") so engineers could filter work tied to vendor remediations without hunting through unrelated tickets.

Outcomes, Metrics and Lessons Learned

Results we measured:

- Vendors inventoried: 312 records consolidated to 283 after removing duplicates.

- High‑tier vendors remediated: 22 of 28 within 120 days.

- Evidence coverage: rose from 34% to 86% for required artifacts.

- Time‑to‑launch: median hold time dropped from 7.5 weeks to 2.1 weeks.

- Exam readiness: top 30 high‑priority vendors had complete exam folders.

- Licensing: vendor dependency mapping sped up state filings toward a 50‑state strategy.
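For an executive summary, the figures above can be restated as rates and relative changes. The short calculation below uses only the numbers reported in this list. The variable and function names are illustrative.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from before to after (negative means a decrease)."""
    return (after - before) / before * 100


remediation_rate = 22 / 28 * 100          # high-tier vendors remediated
hold_time_change = pct_change(7.5, 2.1)   # median hold time, in weeks
duplicate_records = 312 - 283             # records removed by consolidation
coverage_gain_points = 86 - 34            # evidence coverage, percentage points

print(f"High-tier remediation rate: {remediation_rate:.1f}%")
print(f"Median hold time change: {hold_time_change:.1f}%")
print(f"Duplicate records removed: {duplicate_records}")
print(f"Evidence coverage gain: {coverage_gain_points} points")
```

In round terms, about 79% of high‑tier vendors were remediated within 120 days, and the median launch hold fell by 72%.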

Context: third‑party involvement often lengthens breach containment and increases costs. Use the IBM Cost of a Data Breach report when quantifying vendor risk to executives.

Lessons learned:

- Ownership reduces friction. When product, legal, and compliance each have a clear role, remediations close faster.

- Contract language early prevents rework. Insert DPAs and security addenda at negotiation, not after launch.

- Automate evidence where possible. Security ratings and API pulls reduce manual work and surface spikes faster.

- Use shared standards. A single SIG questionnaire avoids vendor fatigue and speeds responses.

Top five repeatable practices:

- Inventory first, then tier. Prioritize remediations by impact.

- Standardize evidence collection with SIG and SOC/ISO expectations.

- Attach a DPIA to every high‑risk vendor record.

- Add security ratings to your monitoring mix.

- Keep an exam‑ready folder per high‑priority vendor aligned to CFPB guidance.

Conclusion and Next Steps

Structured third‑party oversight and embedded compliance leadership make launches predictable.

Next 30/90‑day actions: run an inventory sweep, add the contract addendum to active negotiations, and run one tabletop exercise on vendor breach response. If you want a 30/90 readiness review or to discuss embedding fractional compliance leadership, schedule a consult at https://getcomplyiq.com.

FAQs

Q: How often should we refresh vendor evidence?

A: High‑tier vendors: quarterly. Medium: semi‑annual. Low: annual. Adjust cadence by vendor criticality and recent performance.

Q: Do we need SOC 2 for every vendor?

A: No. Require SOC 2 Type II for vendors that handle sensitive customer data or perform core processing. For others, accept ISO reports, penetration test results, architecture evidence, or documented compensating controls.

Q: How should we handle vendors that refuse contract clauses?

A: Treat refusal as a risk signal. Options: add segmentation, limit data shared, require extra monitoring, or escalate to procurement for alternatives. Document decisions and compensating controls in the vendor card.

Q: What artifacts do regulators expect during an exam?

A: Typical artifacts: vendor registry, DPAs/SOWs, SOC/ISO reports, DPIAs, penetration test reports, remediation logs, and an executive steering deck.

Q: How do we measure readiness for a 50‑state licensing strategy?

A: Map vendor control coverage to state privacy obligations, update DPAs and disclosures per state using the IAPP tracker, and verify DPIAs for high‑risk data flows.

Q: Which external resources help map privacy obligations?

A: NIST Privacy Framework for privacy outcomes and DPIA alignment. IAPP state tracker for state law specifics. Shared Assessments for questionnaires and maturity guidance.